My close friend and frequent collaborator, A. Wilcox, recently wrote a précis on the announcement that Linux is dropping support for the Itanium microprocessor. At the time of writing, it hadn't been published and wasn't yet polished, but it did kickstart my own thought processes. While I'll address the implications (oh no!) of this deprecation in a follow-up post, I'd like to start with a little history, for those of you who don't know the Itanium.

Those of you who've read your history well may know some of the things below, and may not yet know some of the others. So bear with me, and buckle up: we're going time-travelling.

Prelude

A History of Daring Ideas.

Once upon a time, there was a microprocessor manufacturer. In fact, they were the only microprocessor manufacturer, as they had dared to dream that one day, changing a computer’s nature might be done simply by reconfiguring its software – a stream of words feeding into a piece of sand that we purified and tricked into thinking.

In the late 1960s, a computer was the size of a large-ish piece of furniture, and required many, many integrated circuits, crudely assembled into larger logic systems, in order to work.

A man named Marcian Hoff, liaising on Intel’s behalf with Busicom, a Japanese calculator manufacturer that had engaged Intel to produce chips for an electronic calculator, had a brilliant idea: a single general-purpose “computer” chip, what we would now call a central processing unit, paired with different software and different support chips, could be used in many different calculators.

A different man, Stanley Mazor, joined Intel shortly after Hoff’s ideas had started percolating. He was able to reduce subroutine complexity by developing an interpreter that took larger instructions and broke them down into simpler instructions that the CPU could execute. This idea of a “decoder” front end, which allows complex instruction sets to be used to program simpler CPUs, would persist through the decades, and is at the core of basically every CPU in consumer use today.

A third man, Federico Faggin, who would later become famous for leaving Intel to co-found Zilog and design the much-beloved Z80 CPU, became responsible for turning Hoff’s and Mazor’s ideas into an electrical reality: a custom integrated circuit, capable of high levels of integration, and ready for incorporation into computing machines. He would go on to design the subsequent Intel 8080, which stood Intel in good stead to ride the crest of the microcomputer revolution.

Busicom employee Shima Masatoshi had performed much of the initial logic design of what would become this groundbreaking CPU. After some months back in Japan, Shima returned to work with Faggin, becoming a valued member of Intel’s design team until he followed Faggin to Zilog to develop the Z80.

These four men developed the world’s first general-purpose microprocessor: a complete CPU on a single chip, in the way that we understand it today. That company was Intel, and that product was the 4004.

Once upon a time, there was a microprocessor manufacturer. This time, there were others: competitors, some of them selling direct clones of this manufacturer’s parts. But this microprocessor manufacturer sold their CPUs at high prices, and were able to fund research and development into new and daring ideas to advance the state of the art.

In the early 1980s, serious computing was still done on computers the size of a large-ish piece of furniture, and the pioneering large-scale integration work done by Intel had allowed these large computers to become even more powerful. And so, this manufacturer dared to dream. They dreamed that the advantages of these massive machines (programming in high-level languages instead of coding directly for the machine, and moving thirty-two bits of data at a time) might be brought to the masses.

Justin Rattner and 16 other engineers would try to push the limits of semiconductor technology to bring this dream to life. Even so, what we would consider today to be the CPU, which they called the “General Data Processor”, would require two integrated circuits to implement, and thus would be constrained by the limits of the wiring between them.

The software tools built for this dream promised much and, in the end, delivered little. An immature compiler for Ada (a language you’ve likely never heard of, and with good reason) doomed the idea from the start, and overengineered fault tolerance limited the architecture’s maximum performance.

That company was Intel. And you’ve probably never heard of the iAPX 432 either. But Intel wouldn’t be destroyed by its failure. They would learn, and they would improve.

Once upon a time, there was a microprocessor manufacturer. In fact, it was the late 1980s, and there were many microprocessor manufacturers. Some remain today, though not making microprocessors; others still make computing devices. Many failed, and were bought for a pittance by larger firms for their engineering talent. Many more faded into the annals of history.

In the late 1980s, people were finding that you could do more than play Space Invaders and Tetris on these new-fangled “microcomputers”. They had gone from a curiosity for the technically-minded, or an amusement for children, to an important part of the workplace. The release of the IBM PC in 1981 had placed these computers in offices worldwide – nobody was ever fired for buying IBM, after all – and the release of the Macintosh in 1984 had placed a friendlier face on computing that was still able to do real work.

And so, as before, a company dared to dream that the power of a “real” computer could be brought to the masses. This time, the technology was there to support it. John Crawford was responsible for bringing this dream to fruition. A full 32-bit architecture, multiple data types directly supported by machine instructions, an extended instruction set, and more mature compilers meant that the dream of the iAPX 432 could finally be realised; and, as the cherry on top, users could upgrade without needing to learn an entirely new way of working with their computers.

That company was Intel. Their 80386, or more simply “386”, is fondly remembered to this day. It set the gold standard for performance for many years, and kickstarted the destiny of one Linus Torvalds, who once upon a time wrote the lowest part of an operating system, the part directly responsible for managing hardware, known as a “kernel”. You’ve definitely heard of it: Linux runs on any number of computer configurations, and is responsible for much of the internet’s function. And it all started on an Intel 386.

Linux support for the 386 was dropped in December 2012. The last kernel version to support it is kernel 3.7.10, released in February 2013.

Once upon a time, there was a microprocessor manufacturer. It was still the late 1980s, and microcomputers were showing ever more promise as serious computing tools.

In the late 1980s, that company dared to dream – of a supercomputer on a single chip.

This single chip had several 64-bit buses, allowing it to fetch two 32-bit instructions simultaneously. While marketed as a 64-bit processor, it was in fact a 32-bit CPU internally. It also had hardware to keep the caches of multiple CPUs consistent when they worked on the same data, a concept called “cache coherence”.

It was a modest success – because the easiest way to build a supercomputer was, and remains, to shove as many supercomputers in the same place as you can. And a supercomputer on a chip is a very small supercomputer indeed.

That company was Intel. The i860 was used until the late 1990s by the US military, by NASA, and by several vendors providing bespoke solutions for crunching very large numbers. Intel did not consider the i860 a commercial success.

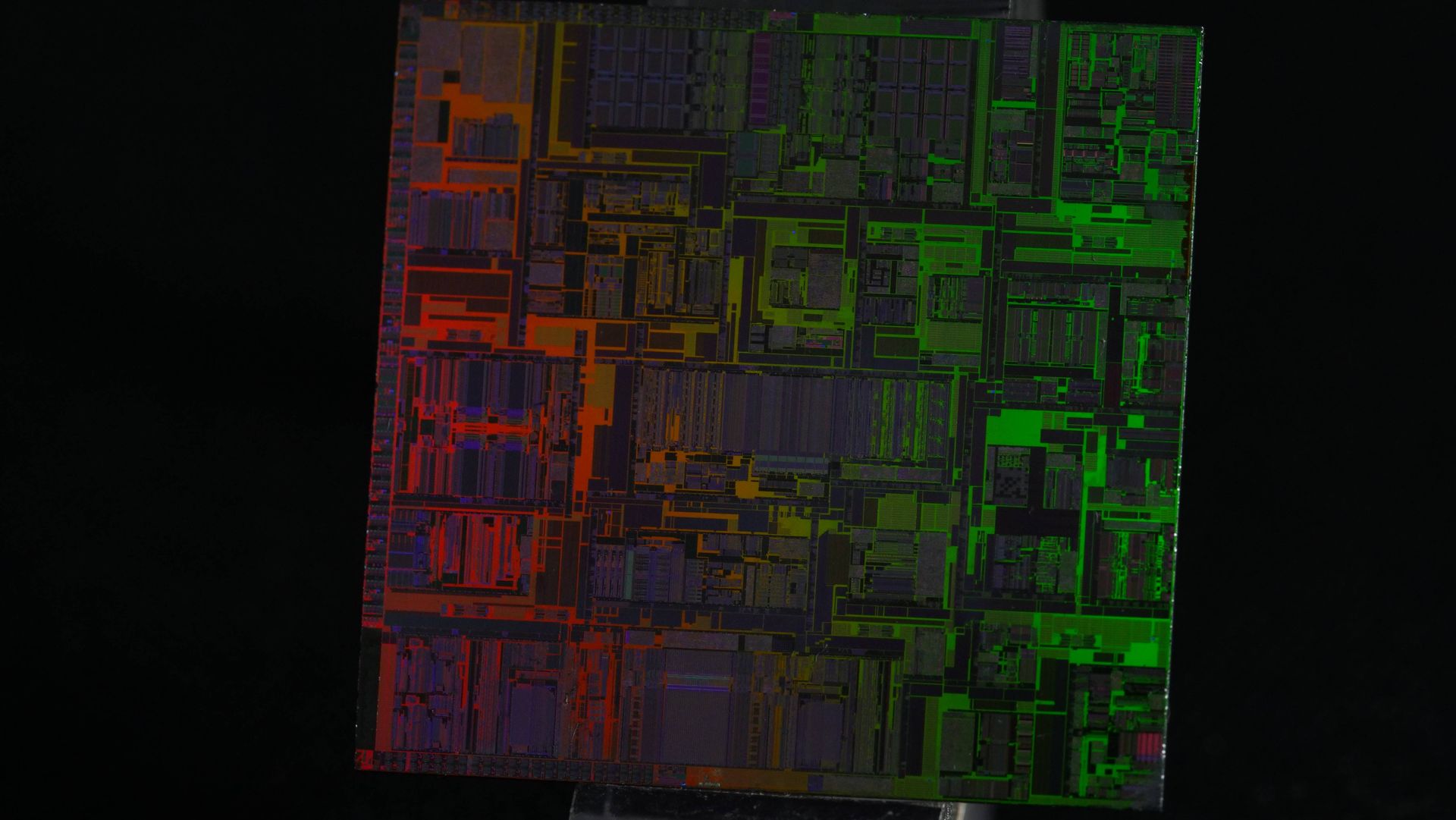

The i860 was the first integrated circuit in the world to exceed one million transistors on a single silicon die. It was a RISC CPU that borrowed from Very Long Instruction Word designs: rather than leaving the hardware to work it out, the compiler explicitly specified which operations should run in parallel, and in what order. It was a gamble, and while not a complete failure, it never saw the widespread adoption Intel had hoped for.

Once upon a time, there was the microprocessor manufacturer. There were others, but if you thought of computer chips, you thought of them. They had built their brand carefully over the years, and had navigated ups and downs, as any company does. They had innovated time and again; some innovations saw more success than others.

In the early 1990s, microcomputer technology was progressing at a breakneck pace – and this company dared, once more, to dream. To dream of a general-purpose microprocessor, which would once again set the standard for performance. An obscenely complex microprocessor, with an obscene price tag, and with such breathtaking performance that one would mutter obscenities when they saw it in use.

Having recently lost a court case regarding trademarks, this company gave their new fledgling child a name – the first time they had shipped a product with a name instead of a part number. And much like a ship so christened, the name would ring throughout history.

This CPU, like the previous runaway success, was designed for backward compatibility while providing a massive leap forward in performance. It would have a superscalar architecture (that is, it could execute two instructions at the same time), separate on-chip caches for code and data, a much faster on-die floating-point unit (speeding up mathematical operations on fractional, high-precision numbers), and a branch prediction system (guessing which way the program would go at each decision point, so that, ideally, the right instructions were already in the pipeline by the time they were needed).

A few years after this CPU launched, a gaming sensation hit the mainstream: Quake. Quake leaned heavily on floating-point arithmetic, and this CPU’s incredible floating-point performance made the game more technically wondrous than most that had been attempted before; in turn, Quake sold many CPUs to those who wanted to experience the tip of the spear in gaming performance.

You might not have heard of Michael Abrash, who developed the blazingly-fast routines that allowed Quake to dominate when paired with the right hardware; nor John Crawford, who co-led the project after the success of his 80386 – but I guarantee that you’ve heard of the Intel Pentium.

Once again, Intel dreamed big – and, by the combination of experience, maturing software support, and improved manufacturing technology, they created a sensation.

The Next Big Idea

The Itanium: next-generation computing, 90s-style.

Those of you with a good mind for pattern recognition might already see how this story is going to play out, even if you haven’t read the technical trade press for the last two decades.

Once upon a time, there was a microprocessor manufacturer. That manufacturer was none other than Intel Corporation, and they were going to change the world.

In the very late 1980s, both Intel and Hewlett-Packard (who produced highly regarded scientific instruments, especially portable calculators and desktop microcomputers designed for laboratory work) started to investigate the possibility of developing computers using a Very Long Instruction Word architecture. In 1994, they banded together to produce a variant of this idea, which Intel named EPIC: Explicitly Parallel Instruction Computing. This name … was perhaps immature in its optimism. Hewlett-Packard would design large parts of this new platform’s logical architecture, its instruction set architecture (ISA); Intel would be responsible for the electrical design and the marketing. Together, they aimed to release the first product of their unholy union, the Itanium, in 1998.

Intel, HP, and a myriad of experts believed that Itanium would first take over the 64-bit workstation and server space, then the mid-tier server market served by Intel’s own Xeon products, before becoming available to the general public for all applications. In May 2001, Intel launched Merced, the first Itanium product, making it available to the OEMs HP, IBM, and Dell. Due to a combination of poor software compatibility, high cost, and, above all else, a lack of performance in the general-purpose computing space, Merced was a failure.

Unlike previous failures, this failure was one Intel had prepared for. The second-generation Itanium product, more heavily influenced in its design by Hewlett-Packard, launched in July 2002 with the codename “McKinley”. Intel had been waiting for McKinley themselves, and in many respects had used Merced primarily so that software vendors could port applications and system software to run on real hardware.

It is worth noting that in April 2003, a plucky microprocessor manufacturer named Advanced Micro Devices (you may have heard of them) launched an entirely different approach to 64-bit workstations and servers: extending the existing x86 architecture to 64 bits. The chip was called the Opteron, and AMD’s authorship of this architecture is the reason that many free software projects named their 64-bit x86 ports “AMD64”. Some, such as Debian, still use that name; others use the vendor-neutral “x86_64”.

Opteron provided something that Itanium couldn’t: a low-cost pathway to 64-bit computing that retained performance commensurate with older 32-bit CPUs while running 32-bit code. When the AMD64 architecture reached the mainstream with the introduction of the Athlon 64, AMD had done something historically unprecedented: rather than merely offering an evolution of an Intel technology, they had become the leader instead of the follower. Intel would release a similar offering of their own in the “Nocona” Xeon and the 64-bit-enabled Pentium 4 and Pentium D, but it would be their “Core 2” line that brought Intel back into contention as a performance leader.

Between the high cost of Itanium systems, the lack of software available even several years after launch, and the fact that AMD had come into the fight with a steel chair, Itanium never stood a chance. Even after it was repositioned into the High Performance Computing space, where it had the chance to truly shine, the ready availability of AMD’s and, later, Intel’s own 64-bit x86 CPUs, with better compatibility and lower cost, kept Itanium relegated to a niche.

Itanium performs amazingly at tasks for which it is well suited. That sentence may make your head hurt, but I need you to understand it to understand my next point: Itanium is absolutely awful at general-purpose computing. Its design relies on the compiler to find, ahead of time, work that can be done in parallel; number-crunching code is full of such work, while the branch-heavy, unpredictable code of everyday servers and desktop software is not. You’re not going to run a Minecraft server on Itanium. You’re not going to run IIS or nginx or Apache (popular web servers) on Itanium, or if you are, they’re not going to outperform a contemporary Xeon or Core series CPU. As a mail server or a packet router, Itanium is wasted. If you want to model complex weather patterns, and you’ve written the software, an Itanium machine may be a worthwhile investment: it excels at complex arithmetical operations on large datasets. If you want to write a blog post like the one I’m writing now, you’d be better served by a Macintosh Performa from 1996 running Microsoft Word 5.1a.

Itanium had difficulty breaking into the market because it was largely irrelevant at launch. Sadly, Itanium is now relegated to a historical argument between academics. Hewlett-Packard paid Intel to keep manufacturing and supporting Itanium processors: in 2008, they paid approximately 440 million US dollars to continue production and updates through 2014, and in 2010, a further deal brought Intel another 250 million US dollars to continue production through 2017.

In 2005, IBM ceased designing products around the Itanium processor. Later that year, Dell made the same decision, and their PowerEdge server products have since run quite happily on Xeon CPUs, using the 64-bit x86 implementation that AMD pioneered.

In 2010, Intel stopped producing compilers that targeted the Itanium chips. Earlier that year, Microsoft had announced that Windows Server 2008 R2 would be the last version of Windows to support the Itanium architecture.

Hewlett-Packard, who showed their work, who demonstrated a theoretically worthy successor to both PA-RISC and SGI’s MIPS hardware, and a competitor to DEC’s Alpha platform, who believed they could kill PowerPC; Hewlett-Packard, legendary name in scientific instrumentation and computing, backed the wrong horse.

PowerPC would fade into obsolescence not by the hand of Merced or McKinley, nor by the brave new world of IA-64 (HP and Intel’s term for the Itanium 64-bit architecture), but by incremental improvements made to the Pentium M, itself a low-power descendant of the Pentium III. The Intel Core series of CPUs would replace PowerPC CPUs in Apple’s Macintosh products after the same thermal and fabrication issues that plagued NetBurst and the Pentium 4 stalled development of IBM’s POWER4-based PowerPC 970, Apple’s “G5”.

This year, 2023, it was announced that the Linux kernel would drop support for Itanium. There has been gnashing of teeth, wailing and lamentation, with some fearing that this is the death knell for other niche architectures. The difference between Itanium and those is … you can still buy working SPARC, Alpha, and Motorola 68000-series hardware on the used market. Itanium never sold well, and the amount of hardware that has trickled down into the hands of hobbyists is tiny. And without people who can both keep an Itanium system functioning and debug Linux kernel code, any hope of support vanishes. The last kernel version to support Itanium hardware will be this year’s long-term support release, the 6.6 series. It will remain supported for at least two years, and likely more.

While the broader concern about the Linux kernel removing support for deprecated hardware is not entirely unfounded (something I will go into in a follow-up post), Itanium isn’t the standard-bearer for some computer hardware extinction event.

Itanium has died a thousand deaths. Many of Intel’s past failures died but once.